The CData JDBC Driver for Spark implements JDBC standards that enable third-party tools to interoperate, from wizards in IDEs to business intelligence tools. This article shows how to connect to Spark data with wizards in DBeaver and browse data in the DBeaver GUI.

About DBeaver: DBeaver is a universal database management tool for everyone who needs to work with data in a professional way. It is a single client for both relational (RDBMS) and NoSQL databases, including Apache Cassandra, and its GUI makes it straightforward to run all kinds of database queries. With DBeaver you can edit data much as you would in a spreadsheet, create analytical reports based on records from different data stores, and export information in an appropriate format.

Create a JDBC Data Source for Spark Data

Follow the steps below to load the driver JAR in DBeaver.

- Open the DBeaver application and, in the Databases menu, select the Driver Manager option. Click New to open the Create New Driver form.

- In the Driver Name box, enter a user-friendly name for the driver.

- To add the .jar, click Add File.

- In the create new driver dialog that appears, select the cdata.jdbc.sparksql.jar file, located in the lib subfolder of the installation directory.

- Click the Find Class button and select the SparkSQLDriver class from the results. This will automatically fill the Class Name field at the top of the form. The class name for the driver is cdata.jdbc.sparksql.SparkSQLDriver.

- Add jdbc:sparksql: in the URL Template field.
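
The two values entered in the form above (the class name and the URL template) can also be sanity-checked outside DBeaver. The following is a minimal sketch, not part of the driver itself: the `DriverCheck` class and its method names are hypothetical, and it assumes the driver JAR has been placed on the Java classpath. It only reports whether the class named in the steps above can be found and whether a URL starts with the `jdbc:sparksql:` prefix.

```java
public class DriverCheck {
    // Values taken from the driver setup steps above.
    static final String DRIVER_CLASS = "cdata.jdbc.sparksql.SparkSQLDriver";
    static final String URL_PREFIX = "jdbc:sparksql:";

    // Returns true if the named class can be located on the classpath.
    // The class is looked up without being initialized.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, DriverCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    // Returns true if the URL begins with the prefix used in the URL Template field.
    static boolean matchesTemplate(String url) {
        return url != null && url.startsWith(URL_PREFIX);
    }

    public static void main(String[] args) {
        System.out.println("Driver class found: " + isOnClasspath(DRIVER_CLASS));
        System.out.println("URL matches template: "
                + matchesTemplate("jdbc:sparksql:Server=127.0.0.1;"));
    }
}
```

If the first line prints `false`, the JAR is most likely missing from the classpath used to run the check.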

Create a Connection to Spark Data

Follow the steps below to add credentials and other required connection properties.

- In the Databases menu, click New Connection.

- In the Create new connection wizard that results, select the driver.

- On the next page of the wizard, click the driver properties tab.

- Enter values for authentication credentials and other properties required to connect to Spark.

Set the Server, Database, User, and Password connection properties to connect to SparkSQL.

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Spark JDBC Driver. Either double-click the JAR file or execute the jar file from the command-line.

java -jar cdata.jdbc.sparksql.jar

Fill in the connection properties and copy the connection string to the clipboard.

Below is a typical connection string:

jdbc:sparksql:Server=127.0.0.1;
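
A connection string of this shape can also be assembled programmatically. The sketch below is illustrative only: the `SparkUrlBuilder` class is hypothetical, and it assumes that every property is written as `Name=Value;` after the `jdbc:sparksql:` prefix, as in the typical string above. It does not escape values that themselves contain `;` or `=`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class SparkUrlBuilder {
    static final String PREFIX = "jdbc:sparksql:";

    // Joins the properties as Name=Value; pairs after the prefix,
    // preserving the insertion order of the map.
    static String buildUrl(Map<String, String> props) {
        StringBuilder sb = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Server", "127.0.0.1");
        // Prints: jdbc:sparksql:Server=127.0.0.1;
        System.out.println(buildUrl(props));
    }
}
```

Other properties named in this article (Database, User, Password) could be added to the map in the same way.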

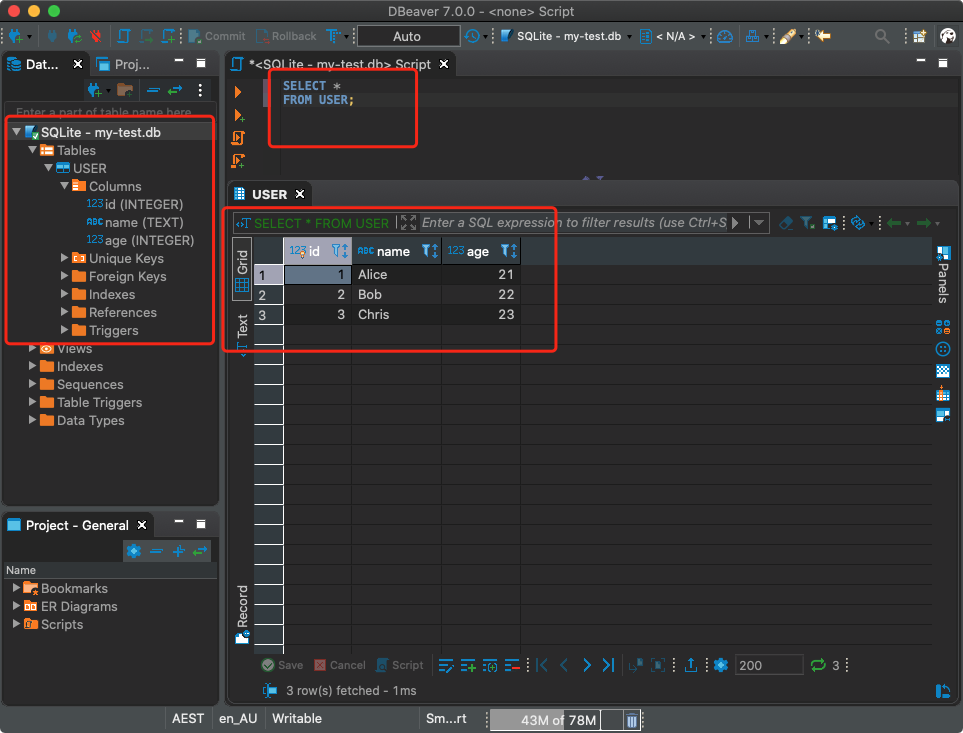

Query Spark Data


You can now query information from the tables exposed by the connection: Right-click a Table and then click Edit Table. The data is available on the Data tab.
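
The same data can be read from Java through the standard `java.sql` API. This is a minimal sketch under stated assumptions: the `SparkQuerySketch` class and the table name `SampleTable` are hypothetical placeholders, the driver JAR is assumed to be on the classpath, and the URL is the typical connection string shown earlier. If no suitable driver is available, the method reports the error and returns -1 instead of throwing.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class SparkQuerySketch {
    // Runs the query, prints up to maxRows rows, and returns the number of
    // rows printed, or -1 if the connection or the query failed.
    static int printRows(String url, String sql, int maxRows) {
        try (Connection conn = DriverManager.getConnection(url);
             Statement stmt = conn.createStatement()) {
            stmt.setMaxRows(maxRows); // standard JDBC row cap
            try (ResultSet rs = stmt.executeQuery(sql)) {
                int cols = rs.getMetaData().getColumnCount();
                int count = 0;
                while (rs.next()) {
                    StringBuilder row = new StringBuilder();
                    for (int i = 1; i <= cols; i++) {
                        if (i > 1) row.append(" | ");
                        row.append(rs.getString(i));
                    }
                    System.out.println(row);
                    count++;
                }
                return count;
            }
        } catch (SQLException e) {
            System.err.println("Query failed: " + e.getMessage());
            return -1;
        }
    }

    public static void main(String[] args) {
        // SampleTable is a placeholder; substitute a table exposed by your connection.
        printRows("jdbc:sparksql:Server=127.0.0.1;", "SELECT * FROM SampleTable", 10);
    }
}
```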

DBeaver Release Notes

- Dark theme support was improved (Windows 10 and GTK)

- Data viewer:

  - Copy As: format configuration editor was added

  - Extra configuration for filter dialog (performance)

  - Sort by column was fixed (for small fetch sizes)

  - Case-insensitive filters support was added

  - Plaintext view now supports top/bottom dividers

  - Data editor was fixed (when column name conflicts with alias name)

  - Duplicate row(s) command was fixed for multiple selected rows

  - Edit sub-menu was returned to the context menu

  - Columns auto-size configuration was added

  - Dictionary viewer was fixed (for read-only connections)

  - Current/selected row highlighting support was added (configurable)

- Metadata search now supports search in comments

- GIS/Spatial:

  - Map position is preserved after tiles change

  - Support of geometries with Z and M coordinates was added

  - PostGIS: DDL for 3D geometry columns was fixed

  - Presto + MySQL geometry type support was added

  - BigQuery now supports spatial data viewer

  - Binary GeoJSON support was improved

  - Geometry export was fixed (SRID parameter)

  - Tiles definition editor was fixed (multi-line definitions + formatting)

- SQL editor:

  - Auto-completion for object names with spaces inside was fixed

  - Database objects hyperlinks rendering was fixed

- SQL Server: MFA (multi-factor authentication) support was added

- PostgreSQL: array data types read was fixed

- Oracle: indexes were added to table DDL

- Vertica: LIMIT clause support was improved

- Athena: extra AWS regions were added to connection dialog

- Sybase IQ: server version detection was improved

- SAP ASE: user function loading was fixed

- Informix: cross-database metadata read was fixed

- We migrated to Eclipse 2021-03 platform